An AI that can make music.

I was lounging on my bed today, half asleep, when suddenly I got this message:

Naturally, I googled it, and boy was I shocked!

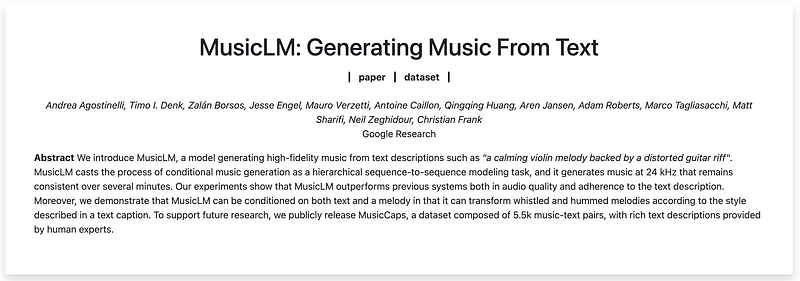

This is what greeted me when I googled it. From the title alone, I knew what I was in for.

I went through the research paper and I was blown away. This thing is awesome.

Let's start with: what is it?

MusicLM is an AI model from Google Research that generates music from text descriptions such as “a calming violin melody backed by a distorted guitar riff”. It uses a hierarchical sequence-to-sequence modeling approach to generate high-fidelity music at 24 kHz that remains consistent over several minutes. It can even be conditioned on both text and a melody: you hum or whistle a tune, and the model renders it in the style your text describes. This research is a significant advance in AI music generation and has the potential to greatly impact the music industry.

How does it work?

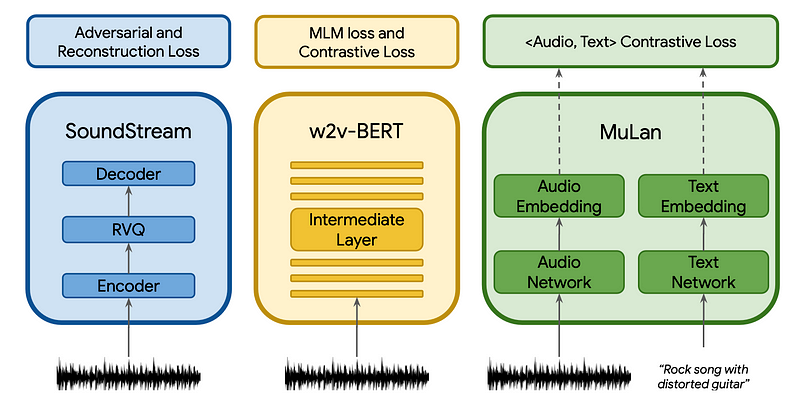

Under the hood, MusicLM has two parts: (1) the representation and tokenization of audio and text, and (2) the hierarchical modeling of audio representations.

In the first part, MusicLM relies on three pretrained models, each of which extracts a different representation. The first, SoundStream, is a neural audio codec that produces acoustic tokens; these capture fine-grained detail and make high-fidelity synthesis possible. The second, w2v-BERT, is a self-supervised model that provides semantic tokens, which help keep the music coherent over a long period of time. The third, MuLan, is a joint music-text embedding model whose tokens act as the conditioning signal. Because MuLan embeds audio and text in the same space, the tokens computed from audio during training can be swapped for tokens computed from the text prompt at inference time.
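To make "tokenization" a bit more concrete: SoundStream turns continuous embeddings into discrete tokens with a residual vector quantizer, where each codebook quantizes whatever the previous one left over. Here is a toy sketch of that idea in plain Python. The codebooks are random, and the names (`make_codebooks`, `rvq_encode`, `rvq_decode`) and sizes are my own made-up stand-ins, not anything from the MusicLM code; a real codec learns its codebooks from data.

```python
import random

def make_codebooks(levels, size, dim, seed=0):
    """Random toy codebooks (assumption: real ones are learned, not random).

    Deeper levels use smaller values, since they only refine the residual.
    """
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1, 1) / (2 ** level) for _ in range(dim)]
         for _ in range(size)]
        for level in range(levels)
    ]

def nearest(codebook, vec):
    """Index of the codeword closest to vec (squared Euclidean distance)."""
    def dist(code):
        return sum((c - v) ** 2 for c, v in zip(code, vec))
    return min(range(len(codebook)), key=lambda i: dist(codebook[i]))

def rvq_encode(vec, codebooks):
    """Return one token per codebook; each level quantizes the leftover residual."""
    residual = list(vec)
    tokens = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        tokens.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tokens

def rvq_decode(tokens, codebooks):
    """Rebuild an approximation by summing the chosen codeword from each level."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(tokens, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out

codebooks = make_codebooks(levels=3, size=8, dim=4)
embedding = [0.2, -0.5, 0.1, 0.9]           # made-up embedding of one audio frame
tokens = rvq_encode(embedding, codebooks)   # three small integers, one per level
approx = rvq_decode(tokens, codebooks)
print(tokens, approx)
```

The point is only that a frame of audio ends up as a handful of small integers, which is exactly the kind of sequence a language model can be trained on.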

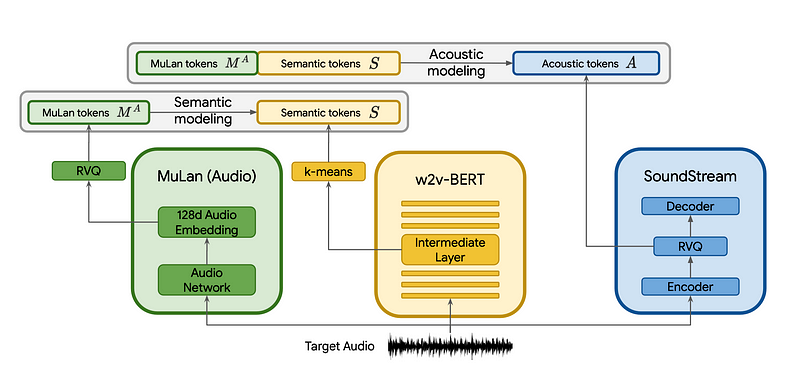

In the second part, the model combines the discrete representations from the first part and generates music in two stages. In the first stage, it learns to map the MuLan audio tokens to the semantic tokens. In the second stage, it predicts the acoustic tokens conditioned on both the MuLan audio tokens and the semantic tokens. Each stage is modeled by its own decoder-only Transformer.

At inference time, the model uses the MuLan text tokens computed from the text prompt as a conditioning signal and converts the generated audio tokens to waveforms using the SoundStream decoder.
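The two stages and the inference path described above can be sketched roughly like this. It is a minimal toy, not the real thing: `toy_decoder` is a hypothetical stand-in for a trained decoder-only Transformer (it just does arithmetic on the context), and the token values, vocabulary size, and sequence lengths are made up for illustration. The final SoundStream decoding step is left out because it needs the actual codec.

```python
from typing import Callable, List, Sequence, Tuple

NextToken = Callable[[Sequence[int]], int]

def toy_decoder(vocab_size: int, salt: int) -> NextToken:
    """Hypothetical stand-in for a trained decoder-only Transformer.

    It is a deterministic function of the context so the sketch is
    reproducible; a real model samples from a learned distribution.
    """
    def next_token(context: Sequence[int]) -> int:
        return (sum(context) * 31 + len(context) * salt) % vocab_size
    return next_token

def generate(prefix: Sequence[int], next_token: NextToken, length: int) -> List[int]:
    """Autoregressive decoding: each new token sees the prefix and all earlier outputs."""
    seq = list(prefix)
    for _ in range(length):
        seq.append(next_token(seq))
    return seq[len(prefix):]  # return only the newly generated tokens

def text_to_music_tokens(
    mulan_text_tokens: Sequence[int],
    semantic_model: NextToken,
    acoustic_model: NextToken,
    n_semantic: int,
    n_acoustic: int,
) -> Tuple[List[int], List[int]]:
    # Stage 1: MuLan tokens -> semantic tokens (long-term structure).
    semantic = generate(mulan_text_tokens, semantic_model, n_semantic)
    # Stage 2: MuLan tokens + semantic tokens -> acoustic tokens (fine detail).
    acoustic = generate(list(mulan_text_tokens) + semantic, acoustic_model, n_acoustic)
    return semantic, acoustic

# Made-up MuLan text tokens standing in for an embedded text prompt.
mulan_text_tokens = [3, 14, 15, 9]
semantic, acoustic = text_to_music_tokens(
    mulan_text_tokens,
    semantic_model=toy_decoder(vocab_size=1024, salt=7),
    acoustic_model=toy_decoder(vocab_size=1024, salt=11),
    n_semantic=5,
    n_acoustic=10,
)
print(semantic, acoustic)
```

In the real system the acoustic tokens would then go through the SoundStream decoder to become a waveform. The thing worth noticing is the chaining: the second stage sees both the conditioning tokens and everything the first stage produced.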

How will it impact the world?

The potential impact of MusicLM on the world of music could be significant, as it has the ability to automate certain tasks related to music creation and analysis. This could lead to new and more efficient ways of making and distributing music, as well as provide new insights into musical trends and patterns. However, it is also important to note that the development of AI-generated music raises ethical and creative questions, such as the extent to which AI can truly replicate human creativity. The ultimate impact of MusicLM on the world will depend on how it is used and the direction of future research and development in the field of AI and music.

I seriously can't wait to see what wonders AI will do. Great start for AI in 2023.

All references for this article can be found here:

For more AIML stuff follow me on Twitter:

Thanks For Reading 😁, See Ya Guys Next Week.👋🏼