We made an AI to Manage Street Dogs



So, my college has this thing called Project Exhibition. It's basically a course that requires you to build a good project that you can show off during placements.

We were a team of 5 (https://twitter.com/actual_aviral , https://twitter.com/sakshiisawarkar , https://twitter.com/SannidhyaSriva2 , https://twitter.com/Suraj_p__10), and we are all from India. India has a growing problem with street dogs. Every few days, street dogs cause some big commotion in one area or another, so we decided to build a project that addresses this problem to some extent.

So what should we do?

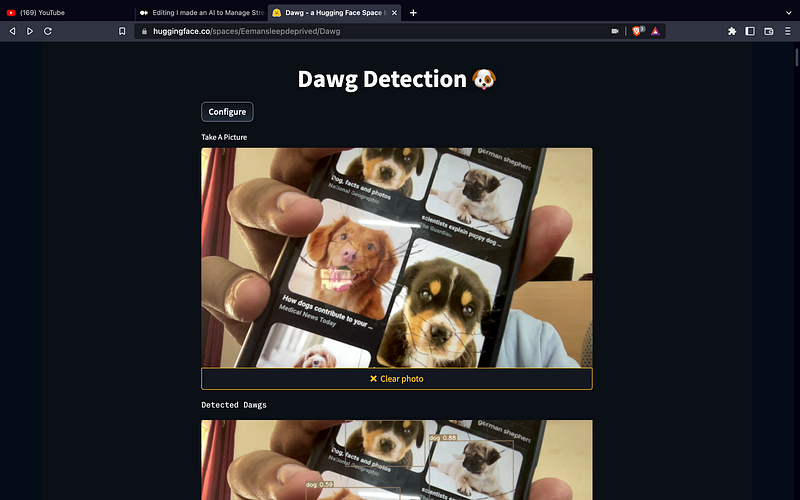

We wanted to make reporting dogs more accessible, so we came up with a web/app concept that lets you take pictures of dogs in your area. After you take a picture, our custom-trained YOLOv7 model recognizes the number of dogs in the image, and we store that number in a database. The webpage/app also uses your IP location as the location the dogs are being reported from. If the number of reports from an area is very high (more than 50), we automatically report the dogs to the nearest municipal corporation so they can be collected.
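The post doesn't describe the database itself. As a minimal sketch, assuming SQLite from Python's standard library and a hypothetical `reports` table (the table, column, and function names are illustrative, not the project's actual schema), storing one row per report with the dog count and the reported location could look like this:

```python
import sqlite3
from datetime import datetime, timezone


def create_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a database with a hypothetical `reports` table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS reports (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               dog_count INTEGER NOT NULL,   -- dogs the detector found in the photo
               latitude REAL NOT NULL,       -- location derived from the user's IP
               longitude REAL NOT NULL,
               reported_at TEXT NOT NULL     -- UTC timestamp in ISO 8601 format
           )"""
    )
    return conn


def add_report(conn: sqlite3.Connection, dog_count: int, latitude: float, longitude: float) -> None:
    """Store one report; parameters are bound with ? placeholders, never string-formatted."""
    conn.execute(
        "INSERT INTO reports (dog_count, latitude, longitude, reported_at) VALUES (?, ?, ?, ?)",
        (dog_count, latitude, longitude, datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


def total_dogs(conn: sqlite3.Connection) -> int:
    """Sum of all reported dog counts (0 when there are no reports yet)."""
    (total,) = conn.execute("SELECT COALESCE(SUM(dog_count), 0) FROM reports").fetchone()
    return total
```

Keeping the coordinates on every row means per-area report counts can be computed later from the same table.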

What was the timeline?

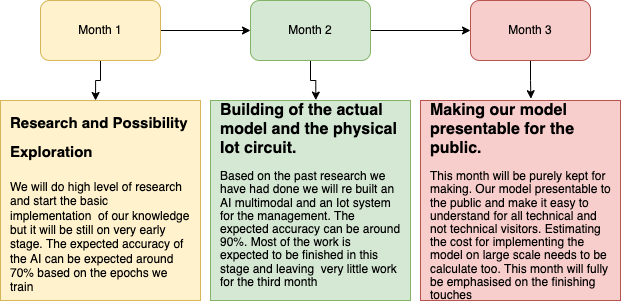

So basically, our college gave us 3 months to complete this project, both the literature part and the technical part.

We did it in the last 3 days before the submission 🙂. It took 3 days of no sleep. Ahhhh, pain.

As you can probably tell, we didn't really have a timeline. All we did was speedrun.

But yes, we did have a timeline in mind, and here it is:

The UI/UX?

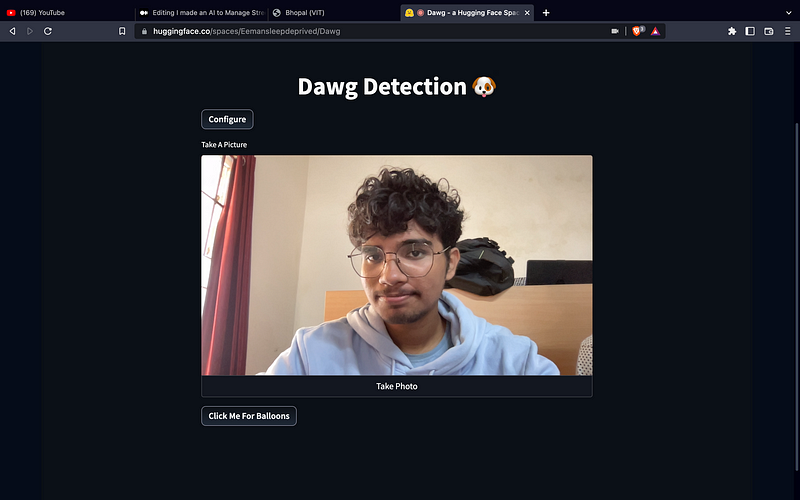

OK, so my amazing friend (https://twitter.com/ParthKalia_) told me about Streamlit. I already knew about it, but I didn't know you could build such a good-quality UI with just a few lines of code, often a single line per component.

So I jumped into Streamlit:

And bam, we have the UI.

Literally, all of this took fewer than 20 lines of code.

How does it work?

I can't show you the full code for this project because it might become a product later, but you can still see part of the code in our Hugging Face Space.

But let's go through how the app works.

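First, the user takes a picture of the dogs. Then the CNN (our YOLOv7 model) basically divides the whole image into a grid of square cells and predicts whether each cell contains a dog. Wherever it finds patterns that match dogs, it draws a bounding box around that part of the image, and each box is one detected dog.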
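The grid idea can be made a little more concrete. In the simplified picture from the original YOLO design, the image is split into an S x S grid, and the cell that contains an object's center is responsible for predicting that object's box. YOLOv7 actually predicts at several grid resolutions (strides) with anchor boxes, so this sketch, built around a hypothetical helper `responsible_cell`, only illustrates the grid assignment and is not YOLOv7's real code:

```python
from typing import Tuple


def responsible_cell(
    box_cx: float, box_cy: float, img_w: int, img_h: int, grid_size: int
) -> Tuple[int, int]:
    """Return (row, col) of the grid cell that contains a box's center.

    box_cx, box_cy: box center in pixels; grid_size: cells per side (S).
    """
    if not (0 <= box_cx < img_w and 0 <= box_cy < img_h):
        raise ValueError("box center must lie inside the image")
    # Scale pixel coordinates to grid units and truncate to a cell index.
    col = int(box_cx * grid_size / img_w)
    row = int(box_cy * grid_size / img_h)
    return row, col
```

For a 640 x 640 input with a stride of 32 (a 20 x 20 grid), `responsible_cell(100, 300, 640, 640, 20)` returns `(9, 3)`: the dog centered at that pixel is predicted by row 9, column 3.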

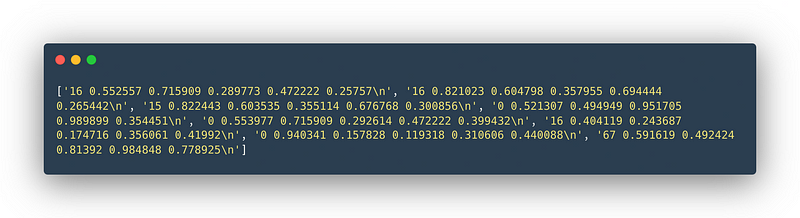

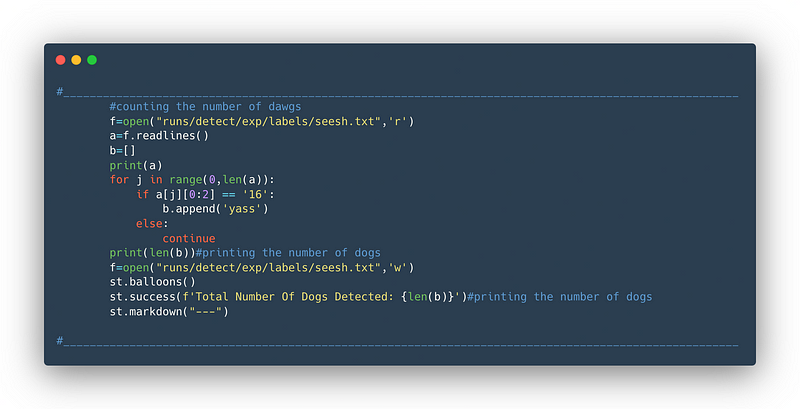

So now we have to count the dogs. How should we do that? YOLO has an option to save every detection, along with its confidence score, to a text file.

This is the text file that gets generated, with one detection per line. In the COCO class list that YOLO uses, class ID 16 is "dog", so every line starting with 16 is a dog.

After that, I just count the lines whose first value (the class ID) is 16.
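Each line of a YOLO label file typically holds `class x_center y_center width height`, with the box coordinates normalized to the range 0 to 1, plus a confidence score at the end when YOLOv7's `detect.py` is run with `--save-txt --save-conf`. A minimal sketch of the counting step, assuming that format and COCO class ID 16 for "dog" (`count_dogs` and `count_dogs_in_file` are hypothetical names, not the project's code). Parsing the first field as an integer is a bit more robust than matching the first two characters, for example if a label set ever has more than 99 classes:

```python
DOG_CLASS_ID = 16  # "dog" in the 80-class COCO label list


def count_dogs(label_text: str, class_id: int = DOG_CLASS_ID) -> int:
    """Count detections of `class_id` in the contents of a YOLO label file."""
    count = 0
    for line in label_text.splitlines():
        fields = line.split()
        # Skip blank lines; the first field of every detection is its class ID.
        if fields and int(fields[0]) == class_id:
            count += 1
    return count


def count_dogs_in_file(path: str, class_id: int = DOG_CLASS_ID) -> int:
    """Read a label file saved by the detector and count the dogs in it."""
    with open(path, encoding="utf-8") as f:
        return count_dogs(f.read(), class_id)
```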

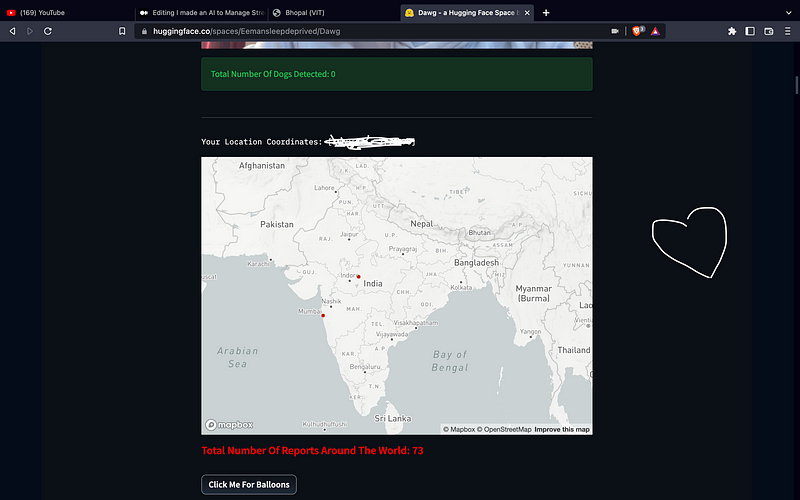

Then I show the number of dogs to the user, like this:

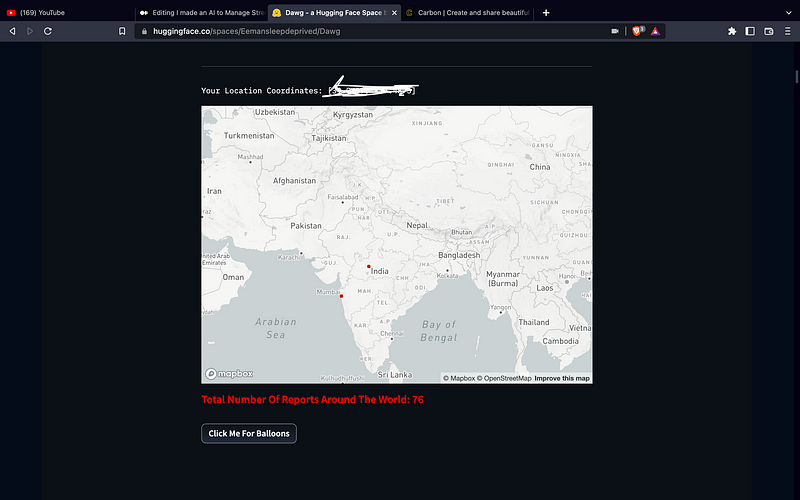

After that, we get the user's location from their IP address and plot it on the map.
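To count reports "from an area", the coordinates from the IP lookup have to be mapped to some area identifier, and the post doesn't say how an area is defined. One simple assumption is a fixed latitude/longitude grid; the cell size and the `to_area_key` helper below are hypothetical. IP-based locations are often only approximate, so coarse cells are a reasonable match:

```python
import math
from typing import Tuple

# Hypothetical cell size: 0.01 degrees of latitude is roughly 1.1 km.
# (Longitude cells get narrower away from the equator; fine for a sketch.)
AREA_CELL_DEG = 0.01


def to_area_key(latitude: float, longitude: float, cell_deg: float = AREA_CELL_DEG) -> Tuple[int, int]:
    """Snap a coordinate to the integer (row, col) index of its grid cell."""
    if not (-90.0 <= latitude <= 90.0 and -180.0 <= longitude <= 180.0):
        raise ValueError("coordinates out of range")
    # floor() (not int()) keeps cells consistent for negative lat/lon too.
    return math.floor(latitude / cell_deg), math.floor(longitude / cell_deg)
```

Two reports a few dozen meters apart, such as `(28.6139, 77.2090)` and `(28.6141, 77.2095)`, both map to the key `(2861, 7720)`.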

After that, I check how many reports are coming from each area. If the number of reports from an area is more than 50, we report it to the authorities so they can collect the dogs.

This is just a prototype, and more features will be added over time.
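The threshold check itself is small. A minimal sketch, assuming each report has already been tagged with some hashable area identifier (a city name, a grid-cell index, and so on); `areas_to_report` is a hypothetical name, and actually notifying the municipal corporation is left out. Note that it counts reports, not dogs, matching the rule of "more than 50 reports":

```python
from collections import Counter
from typing import Hashable, Iterable, Set

REPORT_THRESHOLD = 50  # from the post: act when an area has more than 50 reports


def areas_to_report(area_keys: Iterable[Hashable], threshold: int = REPORT_THRESHOLD) -> Set[Hashable]:
    """Return the areas whose number of reports is strictly greater than `threshold`.

    area_keys: one entry per report, giving the area it came from.
    """
    counts = Counter(area_keys)
    return {area for area, n in counts.items() if n > threshold}
```

An area with exactly 50 reports is not flagged; the 51st report pushes it over the limit.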

Here is the webpage if you wanna try it yourself:

Hugging Face Spaces (huggingface.co)

For my GitHub, go here:

For my day-to-day AI/ML updates, follow me on Twitter:

Thanks For Reading 😁, See Ya Guys Next Week 👋🏼