Making an AI to enhance images with 0% loss rate using the ESRGAN model


So, in my last article I made an AI that generates art using CLIP and GPT-3. Check it out here:


It generated beautiful art, but not exactly the crispest images. So I thought, let's make an AI for that. And here we are.

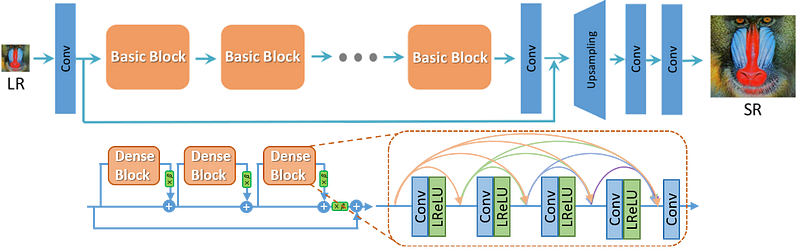

So, after some research I found a model called ESRGAN (Enhanced Super-Resolution Generative Adversarial Networks). It's a super cool model that upscales low-resolution images to “super resolution”, producing a larger and sharper version of the original.

How it works is pretty simple; you can check it out in the authors' very detailed article: Here.

So, without any delay, let's start coding. To run this code you'll need Google Colab in Chrome, with the runtime set to GPU.

Let's start by downloading the required libraries:

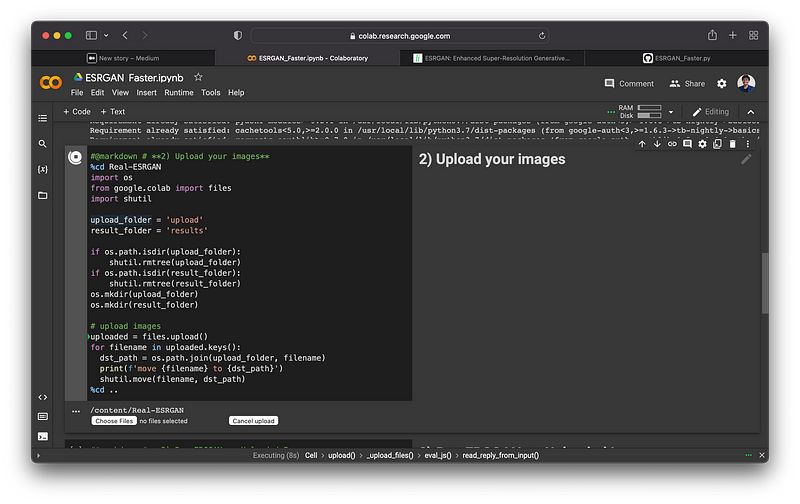
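As a rough sketch, assuming the libraries involved are TensorFlow, TensorFlow Hub, NumPy and Pillow (an assumption; the exact list isn't spelled out in the text), the hypothetical helper `missing_packages` below reports which of them are not importable yet, so you know what still needs installing.

```python
import importlib.util

# Assumed dependencies for this walkthrough (hypothetical list, not from the original cell).
REQUIRED = ["tensorflow", "tensorflow_hub", "numpy", "PIL"]


def missing_packages(names):
    """Return the top-level package names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Install these first:", ", ".join(missing))
    else:
        print("All required packages are available.")
```

Anything it reports can be installed from a Colab cell with `!pip install <package>`. Note that the pip names differ from the import names for two of them: `tensorflow-hub` is imported as `tensorflow_hub`, and `pillow` is imported as `PIL`.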

Let's add an image upload option and send the image to the Colab runtime for processing:

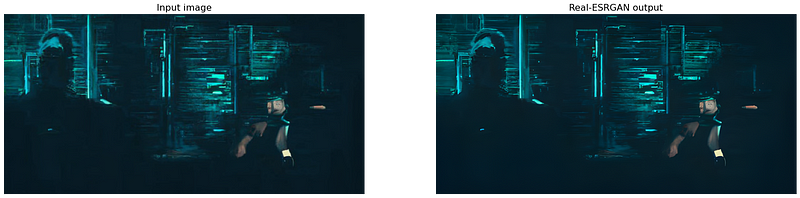
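In Colab, `google.colab.files.upload()` opens a file picker and returns a dict mapping each uploaded filename to its raw bytes. A minimal sketch of turning those bytes into an RGB array, assuming Pillow and NumPy are available; `load_rgb` is a hypothetical helper name, and the Colab part only runs when `google.colab` can be imported.

```python
import io

import numpy as np
from PIL import Image


def load_rgb(data: bytes) -> np.ndarray:
    """Decode image bytes into an (H, W, 3) uint8 RGB array.

    Converting to RGB drops any alpha channel (e.g. from PNGs), since the
    ESRGAN steps sketched later expect exactly three channels.
    """
    with Image.open(io.BytesIO(data)) as img:
        return np.asarray(img.convert("RGB"), dtype=np.uint8)


if __name__ == "__main__":
    try:
        from google.colab import files  # only available inside Google Colab
    except ImportError:
        files = None
    if files is not None:
        uploaded = files.upload()  # dict: filename -> bytes
        name, data = next(iter(uploaded.items()))
        image = load_rgb(data)
        print(name, image.shape)
```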

The image we will be running ESRGAN on today is this:

As you can see, the generated art is quite hazy, so to fix that let's run it through ESRGAN.

Now let's run ESRGAN on the uploaded image:
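Here is a hedged sketch of the usual steps, assuming the pretrained x4 ESRGAN model from TensorFlow Hub used in TensorFlow's own ESRGAN tutorial (`https://tfhub.dev/captain-pool/esrgan-tf2/1`); whether this article used that exact model is an assumption. That model takes a float32 batch of shape (1, H, W, 3) with pixel values in [0, 255], and the tutorial crops H and W to multiples of 4 first. The model is passed in as a plain callable so the pipeline can be tried without TensorFlow; `preprocess`, `postprocess` and `enhance` are hypothetical helper names.

```python
import numpy as np


def preprocess(image: np.ndarray) -> np.ndarray:
    """Crop an (H, W, 3) image so H and W are multiples of 4, then add a batch axis.

    Returns a float32 array of shape (1, H', W', 3) with values still in [0, 255].
    """
    if image.ndim != 3 or image.shape[-1] != 3:
        raise ValueError("expected an (H, W, 3) RGB image")
    h = (image.shape[0] // 4) * 4
    w = (image.shape[1] // 4) * 4
    cropped = image[:h, :w, :]
    return cropped.astype(np.float32)[np.newaxis, ...]


def postprocess(batch) -> np.ndarray:
    """Clip the model output to [0, 255] and turn it back into an (H, W, 3) uint8 image."""
    image = np.clip(np.asarray(batch)[0], 0, 255)
    return image.astype(np.uint8)


def enhance(image: np.ndarray, model) -> np.ndarray:
    """Run a super-resolution model (any callable mapping a (1, H, W, 3) batch to a batch)."""
    return postprocess(model(preprocess(image)))


if __name__ == "__main__":
    # Stand-in "model" for trying the pipeline without TensorFlow:
    # plain 4x nearest-neighbour upscaling, NOT ESRGAN.
    def fake_model(batch):
        return batch.repeat(4, axis=1).repeat(4, axis=2)

    demo = np.random.randint(0, 256, size=(10, 13, 3), dtype=np.uint8)
    print(enhance(demo, fake_model).shape)  # (32, 48, 3)
```

In Colab the real model would be loaded with `esrgan = hub.load(url)` (after `import tensorflow as tf` and `import tensorflow_hub as hub`) and passed as `enhance(image, lambda x: esrgan(tf.constant(x)))`. Keeping the model as a parameter means the cropping and clipping logic can be checked on its own.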

Now that it's all done, let's see the result in a before-and-after format using this code block (optional; the rest of the code doesn't need it):
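One simple way to build a before-and-after view, sketched with NumPy only: enlarge the original 4x by pixel repetition so it matches the ESRGAN output's size, then place the two side by side. `side_by_side` is a hypothetical helper, and the factor of 4 assumes an x4 model.

```python
import numpy as np


def side_by_side(before: np.ndarray, after: np.ndarray, scale: int = 4) -> np.ndarray:
    """Return one image with the enlarged 'before' on the left and 'after' on the right.

    'before' is cropped to a multiple of `scale` (matching the crop applied before
    the model) and then enlarged by nearest-neighbour repetition so both halves
    have the same size.
    """
    h = (before.shape[0] // scale) * scale
    w = (before.shape[1] // scale) * scale
    enlarged = before[:h, :w].repeat(scale, axis=0).repeat(scale, axis=1)
    if enlarged.shape != after.shape:
        raise ValueError(f"shape mismatch: {enlarged.shape} vs {after.shape}")
    return np.concatenate([enlarged, after], axis=1)
```

The combined array can then be shown with Matplotlib, for example `plt.imshow(side_by_side(image, enhanced))` followed by `plt.axis("off")`. Nearest-neighbour enlargement is used for the "before" half because it adds no new detail, so any extra sharpness on the right comes from the model.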

The output is phenomenal:

As you can see for yourself, the output image is much sharper and of higher quality.

And there is no visible loss in image quality; if anything, the quality has increased:
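"No loss" here is a visual judgment. If you want a rough number, one possible sanity check (an assumption, not part of the original code) is to shrink the enhanced image back down by 4, by averaging each 4x4 block, and compare it with the input using PSNR, $\mathrm{PSNR} = 10 \log_{10}\left(255^2 / \mathrm{MSE}\right)$ in dB, where higher means closer. The helper names below are hypothetical.

```python
import numpy as np


def downscale(image: np.ndarray, factor: int = 4) -> np.ndarray:
    """Shrink an (H, W, C) image by averaging non-overlapping factor x factor blocks."""
    h = (image.shape[0] // factor) * factor
    w = (image.shape[1] // factor) * factor
    blocks = image[:h, :w].astype(np.float64).reshape(
        h // factor, factor, w // factor, factor, image.shape[2]
    )
    return blocks.mean(axis=(1, 3))


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; returns inf for identical images."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10 * np.log10(max_value ** 2 / mse))


def round_trip_psnr(original: np.ndarray, enhanced: np.ndarray, factor: int = 4) -> float:
    """Compare the (cropped) original with the enhanced image shrunk back to its size."""
    small = downscale(enhanced, factor)
    return psnr(original[: small.shape[0], : small.shape[1]], small)
```

This only measures how consistent the output is with the input, not how sharp it looks. ESRGAN is a GAN and adds plausible texture that was not in the original pixels, so the number is best read alongside the visual comparison.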

To download the enhanced image, just add this code block:
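A minimal sketch, assuming the enhanced image is a uint8 NumPy array like the one produced by the earlier steps: save it as a PNG with Pillow, then, inside Colab, pass the file to `google.colab.files.download`, which triggers a browser download. `save_png` and the default filename `enhanced.png` are hypothetical.

```python
from pathlib import Path

import numpy as np
from PIL import Image


def save_png(image: np.ndarray, path: str = "enhanced.png") -> Path:
    """Write an (H, W, 3) uint8 array to a PNG file and return its path.

    PNG compression is lossless, so saving adds no extra quality loss
    (unlike JPEG).
    """
    out = Path(path)
    Image.fromarray(np.asarray(image, dtype=np.uint8)).save(out, format="PNG")
    return out
```

In Colab you would then run `from google.colab import files` and `files.download(str(save_png(enhanced)))`.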

And with that the code is done.

If you liked this article, a follow on Medium is appreciated 😁

For the code, here is the notebook:

For more, check out my GitHub:


Follow me on Twitter for my day-to-day AI/ML research updates:

Thanks for reading 😁. See ya next week, guys 👋🏼