Teaching your AI / Chatbot to recognise hand gestures using OpenCV, TensorFlow and MediaPipe.

Ever wanted your AI to interact with deaf people, or to fist bump you whenever you want? It is actually very easy: it can be done in about 25 lines of code.

For starters, you will need a deep-learning-based AI/chatbot. If you don't have one set up, read my earlier article on how to make one, published on medium.com.

Once that's set up, let's get through some theory. Blah! I know it's boring, so I will keep it short.

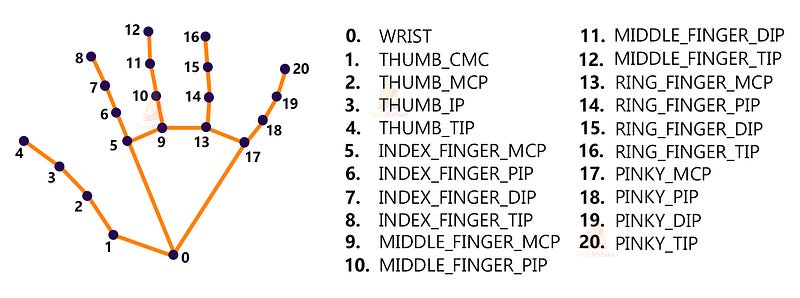

Basically, we will use MediaPipe to detect the hand and its keypoints. It gives exactly 21 keypoints for each detected hand.
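For reference, the 21 keypoints can be written out as a plain Python list. The names follow the `HandLandmark` enum in `mp.solutions.hands`, in index order 0 to 20. The `fingertip_indices` helper is a hypothetical addition for illustration only, not part of MediaPipe.

```python
# The 21 hand keypoints, in the index order MediaPipe reports them (0 to 20).
HAND_KEYPOINTS = [
    "WRIST",
    "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP",
    "INDEX_FINGER_MCP", "INDEX_FINGER_PIP", "INDEX_FINGER_DIP", "INDEX_FINGER_TIP",
    "MIDDLE_FINGER_MCP", "MIDDLE_FINGER_PIP", "MIDDLE_FINGER_DIP", "MIDDLE_FINGER_TIP",
    "RING_FINGER_MCP", "RING_FINGER_PIP", "RING_FINGER_DIP", "RING_FINGER_TIP",
    "PINKY_MCP", "PINKY_PIP", "PINKY_DIP", "PINKY_TIP",
]


def fingertip_indices(names=HAND_KEYPOINTS):
    """Return the indices of the five fingertip keypoints (hypothetical helper)."""
    return [i for i, name in enumerate(names) if name.endswith("_TIP")]
```

So the wrist is keypoint 0, and each finger contributes four keypoints running from its base to its tip.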

Theory part over. Phew!

Stuff needed for this project:

1. Python 3.x (we used Python 3.8.8 in this project)

2. OpenCV 4.5

- Run “pip install opencv-python” to install OpenCV.

3. MediaPipe 0.8.5

- Run “pip install mediapipe” to install MediaPipe.

4. TensorFlow 2.5.0

- Run “pip install tensorflow” to install the TensorFlow module.

5. NumPy 1.19.3
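Before going further, it can help to confirm that the packages above are actually importable. The sketch below uses only the standard library. The `REQUIRED` mapping and the `missing_packages` helper are hypothetical names made up for this check.

```python
import importlib.util

# Import name -> pip package name, taken from the list above.
# (The opencv-python package is imported as cv2.)
REQUIRED = {
    "cv2": "opencv-python",
    "mediapipe": "mediapipe",
    "tensorflow": "tensorflow",
    "numpy": "numpy",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of the packages that cannot be found."""
    return [
        pip_name
        for module_name, pip_name in required.items()
        if importlib.util.find_spec(module_name) is None
    ]
```

Calling `missing_packages()` returns an empty list when everything is installed; anything it does return can be passed to pip.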

Let's start with the imports:

Initialising MediaPipe:

The mp.solutions.hands module performs the hand recognition algorithm, so we create the object and store it in mpHands.
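A sketch of that initialisation could look like this. The settings (one hand, 0.7 detection confidence) and the names `hands`, `mpDraw` and `create_hand_detector` are assumptions for illustration. MediaPipe is imported inside the function only so that the settings can be loaded on a machine without it.

```python
# Assumed settings: track a single hand, and only accept detections
# with a confidence of at least 0.7.
HAND_SETTINGS = {"max_num_hands": 1, "min_detection_confidence": 0.7}


def create_hand_detector(settings=HAND_SETTINGS):
    """Return (mpHands, hands, mpDraw) ready to use on video frames."""
    import mediapipe as mp  # imported lazily, see the note above

    mpHands = mp.solutions.hands          # the hand-tracking solution module
    hands = mpHands.Hands(**settings)     # the detector object itself
    mpDraw = mp.solutions.drawing_utils   # helper for drawing the keypoints
    return mpHands, hands, mpDraw
```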

Initialising TensorFlow:
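This step amounts to loading a pre-trained gesture model along with the list of gesture labels it predicts. The sketch below assumes a Keras model saved on disk and a text file with one label per line. Both paths are placeholders you have to supply, and the function names are hypothetical.

```python
def parse_class_names(text):
    """Turn the contents of a labels file (one gesture per line) into a list."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def load_gesture_model(model_path, names_path):
    """Load the trained model and its labels. Both paths are placeholders."""
    from tensorflow.keras.models import load_model  # heavy import, done lazily

    model = load_model(model_path)
    with open(names_path, "r") as f:
        class_names = parse_class_names(f.read())
    return model, class_names
```

Blank lines in the labels file are skipped, so a trailing newline does not produce an empty gesture name.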

Let's read frames from a webcam using OpenCV:
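A minimal sketch of the capture loop, assuming the default camera at index 0. The window name, the mirroring step and the "press q to quit" key are choices made here for illustration.

```python
def show_webcam(camera_index=0, window_name="Output"):
    """Show mirrored webcam frames until 'q' is pressed or the camera stops."""
    import cv2  # imported lazily so this file loads without a camera setup

    cap = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = cap.read()       # ok is False if no frame was read
            if not ok:
                break
            frame = cv2.flip(frame, 1)   # flip horizontally, like a mirror
            cv2.imshow(window_name, frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()                    # always free the camera
        cv2.destroyAllWindows()
```

Checking `ok` avoids a crash on `frame.shape` or `cv2.flip` when the camera is missing or busy.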

Now let's get to the actual hand detection and keypoint finding:
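One way to sketch this step: convert the frame to RGB (OpenCV delivers BGR, MediaPipe expects RGB), run the detector, and scale the normalised keypoints to pixel coordinates. Here `hands` is assumed to be a `mp.solutions.hands.Hands` object, and both function names are hypothetical.

```python
def to_pixel_coords(normalized_points, frame_width, frame_height):
    """Scale (x, y) pairs from the 0..1 range to integer pixel positions."""
    return [
        [int(x * frame_width), int(y * frame_height)]
        for x, y in normalized_points
    ]


def detect_keypoints(frame, hands):
    """Return 21 [x, y] pixel keypoints for the first hand, or [] if none."""
    import cv2  # imported lazily; only needed for the colour conversion

    height, width, _ = frame.shape                 # shape is (rows, cols, channels)
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)   # MediaPipe expects RGB input
    result = hands.process(rgb)
    if not result.multi_hand_landmarks:            # None when no hand is visible
        return []
    hand = result.multi_hand_landmarks[0]
    return to_pixel_coords([(lm.x, lm.y) for lm in hand.landmark], width, height)
```

To draw the detected hand on the frame, `mp.solutions.drawing_utils.draw_landmarks(frame, hand, mp.solutions.hands.HAND_CONNECTIONS)` can be called with the same `hand` object.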

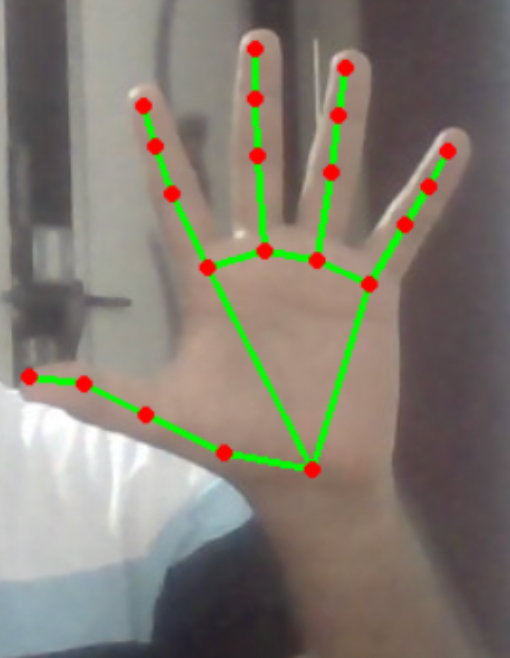

Now our code can successfully detect hand keypoints:

Now let's add the hand gesture detection part:
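A sketch of the classification step, under the assumption that the model takes a batch of 21 [x, y] keypoints and returns one score per gesture label. That input shape is an assumption about the model rather than something stated above, and the function names are hypothetical.

```python
def pick_gesture(scores, class_names):
    """Return the label whose score is highest."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return class_names[best]


def predict_gesture(model, keypoints, class_names):
    """Classify one hand from its 21 [x, y] keypoints."""
    import numpy as np  # imported lazily; only needed to build the batch

    batch = np.array([keypoints])      # assumed input shape: (1, 21, 2)
    scores = model.predict(batch)[0]   # one score per gesture label
    return pick_gesture(scores, class_names)
```

The returned label can then be written onto the frame with `cv2.putText` before the frame is displayed.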

And just like that the code is done!

Now let's turn this into a function and import it into our AI/chatbot, called DEW.

Making it a function:
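One possible shape for that function is sketched below. The detection and classification steps are passed in as callables, so the function stays small and can be tried out without a camera. All names here are hypothetical, including the 100-frame limit.

```python
def webcam_frames(camera_index=0, max_frames=100):
    """Yield up to max_frames mirrored frames from the webcam."""
    import cv2  # imported lazily so the module loads without OpenCV

    cap = cv2.VideoCapture(camera_index)
    try:
        for _ in range(max_frames):
            ok, frame = cap.read()
            if not ok:                 # camera unavailable or stream ended
                break
            yield cv2.flip(frame, 1)
    finally:
        cap.release()


def first_gesture(frames, detect, classify):
    """Return the first gesture seen in frames, or None if no hand shows up.

    detect(frame) should return a list of keypoints (empty if no hand);
    classify(keypoints) should return a gesture label.
    """
    for frame in frames:
        keypoints = detect(frame)
        if keypoints:
            return classify(keypoints)
    return None
```

Saved in a module of its own, this could be called as `first_gesture(webcam_frames(), detect, classify)`, where `detect` and `classify` wrap the hand detector and the gesture model.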

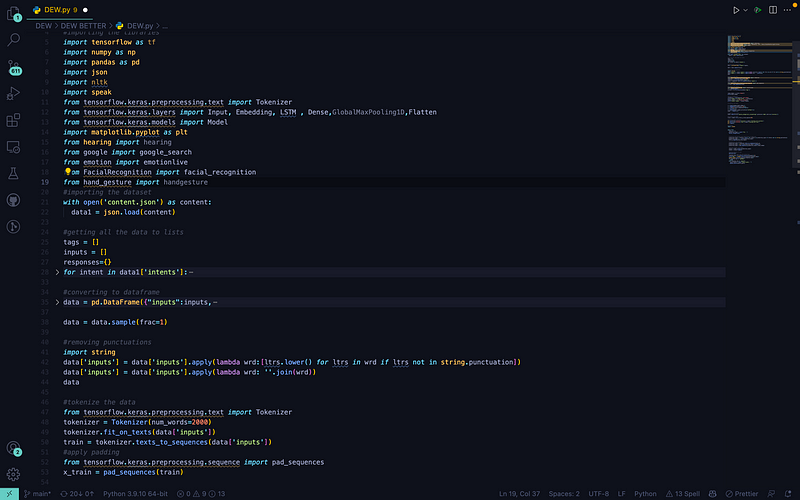

Adding it to DEW:
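DEW's own code is not shown in this article, so the following is only a hypothetical sketch of the hook: a lookup that turns a recognised gesture into a chatbot reply. The gesture labels, the replies and the function name are all made up for illustration and depend on the labels your model was trained on.

```python
# Hypothetical mapping from gesture labels to chatbot replies.
GESTURE_REPLIES = {
    "fist": "Fist bump!",
    "thumbs up": "Glad you like it!",
}

DEFAULT_REPLY = "I did not catch that gesture."


def reply_to_gesture(gesture, replies=GESTURE_REPLIES, default=DEFAULT_REPLY):
    """Return the chatbot's reply for a gesture label (or None)."""
    if gesture is None:
        return default
    return replies.get(gesture, default)
```

The chatbot would call the gesture function when asked to look at a hand, then say or print the result of `reply_to_gesture`.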

The Output:

If you liked the article, a follow is appreciated.

For more, check out my GitHub.

For day-to-day updates on my AI/ML research, follow me on Twitter.

Thanks for reading 😁

See ya next week.